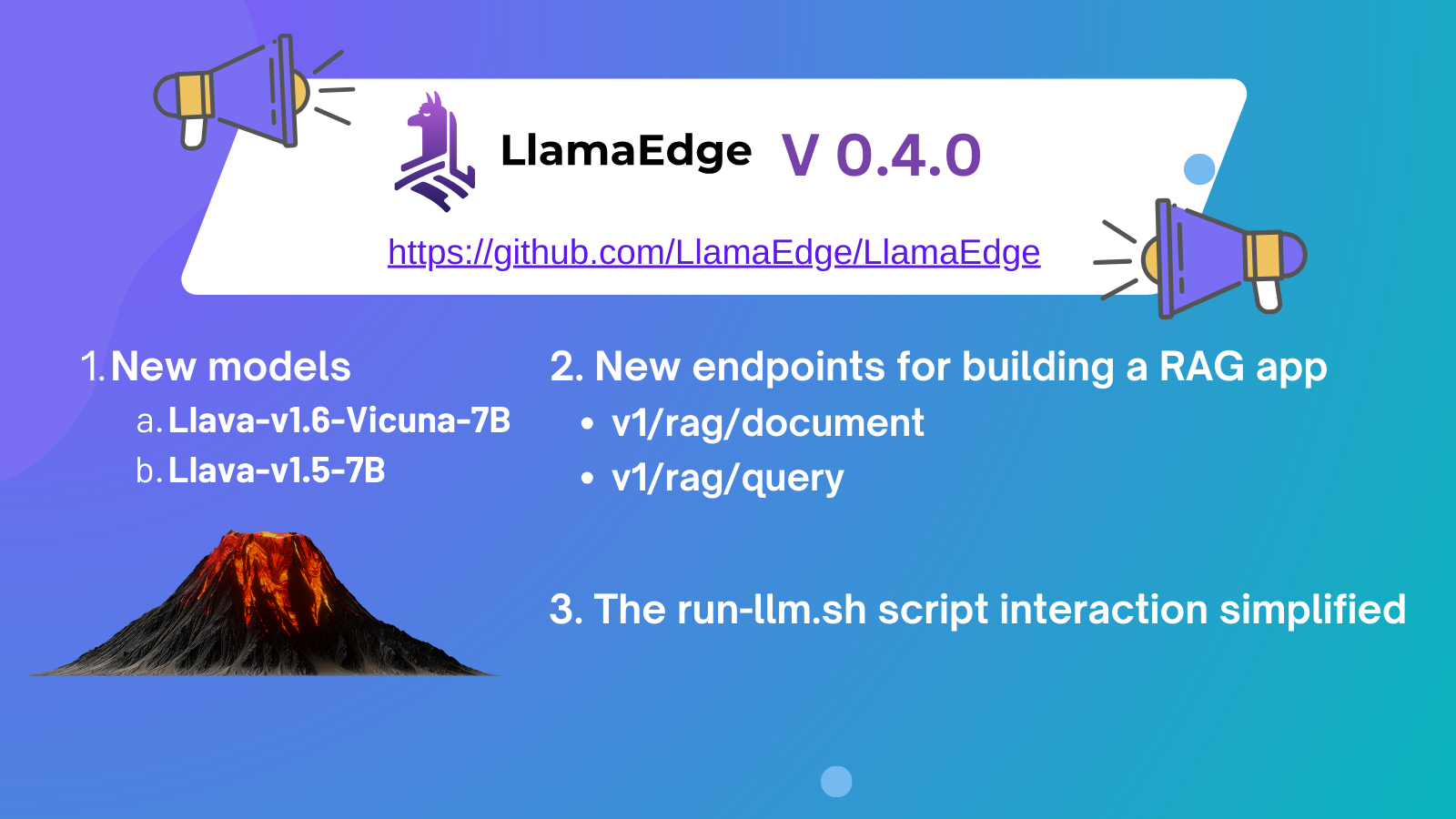

LlamaEdge v0.4.0 is out! Key enhancements:

- Support the Llava series of VLMs (Visual Language Models), including Llava 1.5 and Llava 1.6

- Support RAG services (i.e., OpenAI Assistants API) in the LlamaEdge API server

- Simplify the run-llm.sh script interactions to improve the onboarding experience for new users

Support Llava series of multimodal models

Llava is an open-source Visual Language Model (VLM). It supports multimodal conversations, where the user can insert an image into a conversation and have the model answer questions based on the image. The Llava team released LLaVA-NeXT (Llava 1.6) in January 2024 and claimed that it beats Gemini Pro on several benchmarks.

LlamaEdge 0.4.0 supports inference applications built on both Llava 1.5 and Llava 1.6. Check out the article Getting Started with Llava-v1.6-Vicuna-7B. Currently, each conversation can only contain one image due to Llava model limitations. You should start the conversation with an uploaded image and then chat with the model about the image.

Build “Assistant API” apps with LlamaEdge

The OpenAI Assistants API allows users to import their own documents and proprietary knowledge so that the LLM can answer domain-specific questions more accurately. The technique of supplementing the LLM with external documents is commonly known as RAG (Retrieval-Augmented Generation). The LlamaEdge API server, llama-api-server.wasm, now provides a pair of new endpoints that developers can use to build RAG applications.

- The /v1/rag/document endpoint chunks an input document, converts the chunks into embeddings, and persists the embeddings.

- The /v1/rag/query endpoint answers a user query using context from the saved embeddings.

The above endpoints take a connection URL to a Qdrant database server as part of the request. The Qdrant database stores and retrieves vector embeddings for the external document or knowledge. The embeddings are generated using the LLM started by the llama-api-server.wasm API server.
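
The two-step flow described above (store a document, then query against it) can be sketched with curl. This is a rough illustration only: the JSON field names (qdrant_url, document, query) are hypothetical placeholders, not the server's actual request schema, and both server addresses are assumed local defaults. Check the llama-api-server documentation for the real request format before using it.

```bash
#!/usr/bin/env bash
# Sketch only: the JSON field names below are hypothetical placeholders,
# not the documented request schema of llama-api-server.wasm.

API_BASE="http://127.0.0.1:8080"    # assumed address of the LlamaEdge API server
QDRANT_URL="http://127.0.0.1:6333"  # assumed local Qdrant server (6333 is Qdrant's default REST port)

# Build a request body for /v1/rag/document: the text to chunk and embed,
# plus the Qdrant connection URL where the embeddings are persisted.
# Assumes the text contains no characters that need JSON escaping.
rag_document_body() {
  printf '{"qdrant_url":"%s","document":"%s"}' "$QDRANT_URL" "$1"
}

# Build a request body for /v1/rag/query: the user question,
# plus the same Qdrant connection URL to retrieve context from.
rag_query_body() {
  printf '{"qdrant_url":"%s","query":"%s"}' "$QDRANT_URL" "$1"
}

# POST a JSON body ($2) to an endpoint path ($1) on the API server.
post_json() {
  curl -sS -X POST "$API_BASE$1" -H 'Content-Type: application/json' -d "$2"
}

# Usage (needs both servers running, so it is left commented out):
#   post_json /v1/rag/document "$(rag_document_body 'Paris is the capital of France.')"
#   post_json /v1/rag/query "$(rag_query_body 'What is the capital of France?')"
```

Keeping the body builders separate from the HTTP call makes it easy to swap in the real field names once they are confirmed.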

Simplify the run-llm.sh script interactions

We are continuously improving the run-llm.sh script based on user feedback. One of the most popular requests is to make it easier for new users to get a chatbot up and running as quickly as possible. Now, if you run the script without any arguments, it chooses a sensible set of default values for you.

bash <(curl -sSfL 'https://code.flows.network/webhook/iwYN1SdN3AmPgR5ao5Gt/run-llm.sh')

It will download and start the Gemma-2b model automatically. Open http://127.0.0.1:8080 in your browser and start chatting right away!

If you would like to specify an LLM to download and run, you can give the model name in the --model argument. The following command starts a web-based chatbot using the popular Llama2-7b-chat model.

bash <(curl -sSfL 'https://code.flows.network/webhook/iwYN1SdN3AmPgR5ao5Gt/run-llm.sh') --model llama-2-7b-chat

We also support the following model names in the --model argument. If you would like the script to support another model, please create a PR to add it.

- gemma-2b-it

- stablelm-2-zephyr-1.6b

- openchat-3.5-0106

- yi-34b-chat

- yi-34bx2-moe-60b

- deepseek-llm-7b-chat

- deepseek-coder-6.7b-instruct

- mistral-7b-instruct-v0.2

- dolphin-2.6-mistral-7b

- orca-2-13b

- tinyllama-1.1b-chat-v1.0

- solar-10.7b-instruct-v1.0
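
If you call the script from your own automation, it can help to check the model name before downloading anything. The following is a minimal sketch of one way to do that. The is_supported_model and run_model helpers are hypothetical wrappers, not part of run-llm.sh, and the array simply copies the names listed above plus llama-2-7b-chat from the earlier example.

```bash
#!/usr/bin/env bash
# Model names copied from this article; update the array if the script adds more.
SUPPORTED_MODELS=(
  llama-2-7b-chat
  gemma-2b-it
  stablelm-2-zephyr-1.6b
  openchat-3.5-0106
  yi-34b-chat
  yi-34bx2-moe-60b
  deepseek-llm-7b-chat
  deepseek-coder-6.7b-instruct
  mistral-7b-instruct-v0.2
  dolphin-2.6-mistral-7b
  orca-2-13b
  tinyllama-1.1b-chat-v1.0
  solar-10.7b-instruct-v1.0
)

# Hypothetical helper: return 0 if the given name is in the list, 1 otherwise.
is_supported_model() {
  local name="$1" m
  for m in "${SUPPORTED_MODELS[@]}"; do
    if [ "$m" = "$name" ]; then
      return 0
    fi
  done
  return 1
}

# Hypothetical wrapper: launch run-llm.sh only for a known model name.
run_model() {
  if is_supported_model "$1"; then
    bash <(curl -sSfL 'https://code.flows.network/webhook/iwYN1SdN3AmPgR5ao5Gt/run-llm.sh') --model "$1"
  else
    echo "unknown model: $1" >&2
    return 1
  fi
}

# Usage (downloads and starts the model, so it is left commented out):
#   run_model gemma-2b-it
```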

Finally, you can interactively choose and confirm all steps in the script using the --interactive flag.

bash <(curl -sSfL 'https://code.flows.network/webhook/iwYN1SdN3AmPgR5ao5Gt/run-llm.sh') --interactive

That’s it for now. As always, we love to hear your comments and feedback.

Discord
