Llava-v1.6-Vicuna-7B is the open-source community's answer to OpenAI's multimodal GPT-4V. It is also known as a Visual Language Model for its ability to handle both images and language in a conversation. The model is based on lmsys/vicuna-7b-v1.5.

In this article, we will cover how to create an OpenAI-compatible API service for Llava-v1.6-Vicuna-7B.

We will use LlamaEdge (the Rust + Wasm stack) to develop and deploy applications for this model. There are no complex Python packages or C++ toolchains to install! See why we choose this tech stack.

(LlamaEdge has just released version 0.4.0, which supports the Llava series of models.)

Run Llava-v1.6-Vicuna-7B on your own device

Step 1: Install WasmEdge via the following command line.

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install.sh | bash -s -- -v 0.13.5 --plugins wasi_nn-ggml wasmedge_rustls

Step 2: Download the Llava-v1.6-Vicuna-7B model GGUF file and the mmproj-model-f16.gguf file. The model is 4.78 GB, so it could take a while to download.

curl -LO https://huggingface.co/second-state/Llava-v1.6-Vicuna-7B-GGUF/resolve/main/llava-v1.6-vicuna-7b-Q5_K_M.gguf

curl -LO https://huggingface.co/second-state/Llava-v1.6-Vicuna-7B-GGUF/resolve/main/llava-v1.6-vicuna-7b-mmproj-model-f16.gguf
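A failed or interrupted download can leave an error page or a truncated file on disk instead of the model. As a quick sanity check, the sketch below relies on the fact that GGUF files begin with the four ASCII bytes GGUF. The helper name is_gguf is our own and is not part of WasmEdge or LlamaEdge, and the check only inspects the file header; it does not prove the download is complete.

```shell
# is_gguf FILE: succeed only if FILE exists and starts with the GGUF magic bytes.
# (Hypothetical helper for illustration; not shipped with WasmEdge or LlamaEdge.)
is_gguf() (
  [ -f "$1" ] || exit 1
  # Read the first 4 bytes; strip NUL bytes so the shell can hold the value.
  magic=$(head -c 4 "$1" | tr -d '\0')
  [ "$magic" = "GGUF" ]
)

# Check the two files downloaded in Step 2 (run from the download directory).
for f in llava-v1.6-vicuna-7b-Q5_K_M.gguf llava-v1.6-vicuna-7b-mmproj-model-f16.gguf; do
  if is_gguf "$f"; then
    echo "ok: $f"
  else
    echo "missing or not a GGUF file: $f"
  fi
done
```

If either file is reported as missing or not a GGUF file, re-run the corresponding curl command before moving on.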

Download the API server app. It is a cross-platform portable Wasm app that can run across CPU and GPU devices.

curl -LO https://github.com/LlamaEdge/LlamaEdge/releases/latest/download/llama-api-server.wasm

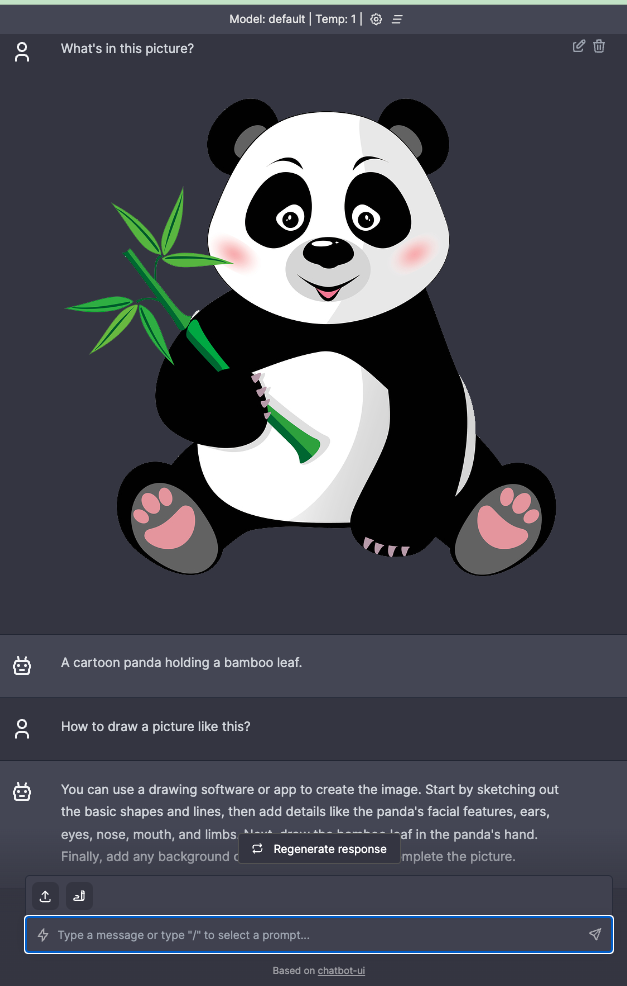

Then, download the chatbot web UI so you can interact with the model in your browser.

curl -LO https://github.com/LlamaEdge/chatbot-ui/releases/latest/download/chatbot-ui.tar.gz

tar xzf chatbot-ui.tar.gz

rm chatbot-ui.tar.gz

Next, use the following command line to start an API server for the model. Then, open http://localhost:8080 in your browser. Enter an image URL and start the chat!

wasmedge --dir .:. --nn-preload default:GGML:AUTO:llava-v1.6-vicuna-7b-Q5_K_M.gguf llama-api-server.wasm -p vicuna-llava -c 4096 --llava-mmproj llava-v1.6-vicuna-7b-mmproj-model-f16.gguf -m llava-v1.6-vicuna-7b
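Loading a model of this size may take a little while, so a script that starts the server and sends a request right away can fail. Here is a minimal sketch of a readiness check, assuming the server listens on http://localhost:8080 as above. wait_for_server is a hypothetical helper of our own; it only confirms that something answers HTTP at that address, not that the model has finished loading.

```shell
# wait_for_server URL [TRIES] [DELAY_SECONDS]
# Poll URL until any HTTP response arrives, or give up after TRIES attempts.
# (Hypothetical helper for illustration; defaults are arbitrary assumptions.)
wait_for_server() (
  url=$1
  tries=${2:-30}
  delay=${3:-2}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Without -f, curl exits 0 on any HTTP response, whatever the status code.
    if curl -s -o /dev/null --max-time 5 "$url"; then
      exit 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  exit 1
)

# Example usage, from a second terminal:
#   wait_for_server http://localhost:8080 && echo "server is up"
```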

From another terminal, you can interact with the API server using curl.

curl -X POST http://localhost:8080/v1/chat/completions \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{"messages": [{"content": [{"type": "text","text": "what is in the picture?"},{"type": "image_url","image_url": {"url": "https://petweb1-1253856731.cos.ap-beijing.myqcloud.com/uploads/20200405/6944322520e28e9f3c497df873cdcd0b.jpg"}}],"role": "user"}],"model": "llava-v1.6-vicuna-7b"}'
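Editing that long JSON string by hand is error-prone. As a rough sketch, the request body can be generated from a prompt and an image URL. build_payload and json_escape are hypothetical helpers of our own, not part of LlamaEdge. The model name defaults to the value passed with -m when the server was started, and the escaping only covers double quotes and backslashes, so it assumes the prompt contains no newlines or control characters.

```shell
# json_escape STRING: escape backslashes and double quotes for use inside JSON.
# (Simplified on purpose: newlines and control characters are not handled.)
json_escape() (
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
)

# build_payload PROMPT IMAGE_URL [MODEL]
# Print a chat completion request with one text part and one image_url part.
build_payload() (
  prompt=$(json_escape "$1")
  image_url=$(json_escape "$2")
  model=$(json_escape "${3:-llava-v1.6-vicuna-7b}")
  printf '{"messages":[{"role":"user","content":[{"type":"text","text":"%s"},{"type":"image_url","image_url":{"url":"%s"}}]}],"model":"%s"}\n' \
    "$prompt" "$image_url" "$model"
)

# Example usage (IMAGE_URL is a placeholder for your own image):
#   curl -X POST http://localhost:8080/v1/chat/completions \
#     -H 'Content-Type: application/json' \
#     -d "$(build_payload 'what is in the picture?' "$IMAGE_URL")"
```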

That’s all. WasmEdge is the easiest, fastest, and safest way to run LLM applications. Give it a try!

Talk to us!

Join the WasmEdge Discord to ask questions and share insights.

Having trouble getting this model running? Please raise an issue at second-state/LlamaEdge, or book a demo with us to run your own LLMs across devices!
