Python is for humans. Rust is for machines. Which future do you choose?

TL;DR: Claude Code + Opus 4.5 vibe coded a Rust implementation of the newly released Qwen3-TTS models. The Rust compiler catches syntax, memory, thread-safety, and other low-level bugs. Claude created its own evaluation tools to ensure functional correctness. Those two feedback loops enabled the agent to work for extended periods and deliver correct Rust programs without human intervention.

The Qwen team recently unleashed Qwen3-TTS, an open-source, SOTA text-to-speech model that's turning heads. At its heart is a neural audio codec that punches way above its weight class: a tiny 0.6B-parameter model delivering the highest perceptual quality scores in the industry.

Speed? Ridiculous. Qwen3-TTS clocks in at a 97-millisecond first-token latency. Type a single character and audio starts streaming almost before you lift your finger. And the voice control capabilities are borderline magical. You can design voices using plain English descriptions or clone any voice from just three seconds of audio.

For us, this release hit different. Our open-source voice AI device, EchoKit, leans hard on TTS services. Qwen3-TTS's combination of ultra-low latency, stellar quality, effortless cloning, and compact size? That's the sweet spot for real-time voice. A self-hosted, open-source solution running on users' own Olares ONE, DGX Spark, or Mac mini would be a massive win for both privacy and cost.

But there was a catch.

Rust is the language of AGI

The Qwen3-TTS inference software is written in Python. That means dragging along 5GB+ of dependencies, wrestling with version conflicts, waiting through 5-second startup times, and juggling virtual environments. All that overhead … just to run TTS? I'd rather not.

Much of modern ML engineering is making Python not be your bottleneck.

— Greg Brockman (@gdb) July 5, 2023

The entire EchoKit stack, from firmware to WebSocket server, is Rust. Compact, blazing-fast binaries with minimal external baggage. That's the standard we wanted for our Qwen3-TTS inference application. And we're not alone. Leading organizations across the industry are building AI infrastructure in Rust for exactly these reasons.

Rust

— Elon Musk (@elonmusk) April 22, 2023

Rust is great for vibe coding

By our rough estimate, the Rust compiler catches 90% of AI hallucinations. If the AI invents a function or type that does not exist, or deviates from best practices on memory or thread safety, the compiler will not let it pass. It even gives the AI detailed error messages on how to fix the problem. That is very well suited to today's coding AI: it provides an excellent reward function for the AI to self-improve.

rust is a perfect language for agents, given that if it compiles it's ~correct

— Greg Brockman (@gdb) January 2, 2026
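Thread safety shows the compiler's role as a feedback loop most clearly. The sketch below is our own illustration, not code from the Qwen3-TTS crate, and `count_in_parallel` is a hypothetical helper. It shares a counter across worker threads with `Arc<Mutex<_>>`. Suppose an agent wrote the same thing with `Rc<RefCell<_>>`, which is not thread-safe. Then `thread::spawn` would reject it at compile time because `Rc` is not `Send`, and rustc's error message would say so.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical helper: sums the lengths of audio chunks on worker threads.
/// `Arc` gives shared ownership across threads; `Mutex` serializes the writes.
/// Swapping these for `Rc<RefCell<usize>>` does not compile: `Rc` is not `Send`,
/// so `thread::spawn` refuses to move it into another thread.
fn count_in_parallel(chunks: Vec<Vec<f32>>) -> usize {
    let total = Arc::new(Mutex::new(0usize));
    let mut handles = Vec::new();

    for chunk in chunks {
        let total = Arc::clone(&total);
        handles.push(thread::spawn(move || {
            // Each worker adds its chunk length while holding the lock.
            *total.lock().unwrap() += chunk.len();
        }));
    }

    for handle in handles {
        handle.join().unwrap();
    }

    // Bind the value first so the lock guard is dropped before `total`.
    let result = *total.lock().unwrap();
    result
}

fn main() {
    let chunks = vec![vec![0.0; 3], vec![0.0; 5], vec![0.0; 2]];
    println!("{}", count_in_parallel(chunks)); // prints 10
}
```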

But what about functional bugs, where the code compiles but the logic is wrong? Human developers "hallucinate" and make these mistakes too. Claude has an ingenious solution: it builds its own tools, in this case on top of Qwen3-TTS's original Python package, to cross-check the Rust code against the reference implementation and ensure functional correctness.
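One common way to build such a cross-check is to dump intermediate tensors from the Python reference run and compare them with the Rust values within a tolerance. Claude's actual tools were written in Python. The Rust sketch below only illustrates the idea and assumes both sides have already been exported as flat `f32` arrays. `compare_to_reference`, `Drift`, and the tolerance are hypothetical names and values, not part of the published crate.

```rust
/// How far a Rust output drifts from the Python reference output.
#[derive(Debug, PartialEq)]
struct Drift {
    max_abs_diff: f32,
    worst_index: usize,
}

/// Compares two flat tensors element by element.
/// Returns Err on a length mismatch, so truncated output can't pass as a match.
fn compare_to_reference(rust_out: &[f32], python_ref: &[f32]) -> Result<Drift, String> {
    if rust_out.len() != python_ref.len() {
        return Err(format!(
            "length mismatch: rust={} python={}",
            rust_out.len(),
            python_ref.len()
        ));
    }
    let mut drift = Drift { max_abs_diff: 0.0, worst_index: 0 };
    for (i, (a, b)) in rust_out.iter().zip(python_ref).enumerate() {
        let d = (a - b).abs();
        if d > drift.max_abs_diff {
            drift = Drift { max_abs_diff: d, worst_index: i };
        }
    }
    Ok(drift)
}

fn main() {
    // Made-up values standing in for tensors exported from each side.
    let python_ref = [0.10_f32, -0.25, 0.50, 0.75];
    let rust_out = [0.10_f32, -0.25, 0.52, 0.75];

    let tolerance = 1e-3; // illustrative; choose per layer and dtype
    match compare_to_reference(&rust_out, &python_ref) {
        Ok(d) if d.max_abs_diff <= tolerance => println!("match"),
        Ok(d) => println!("mismatch at index {} (diff {:.3})", d.worst_index, d.max_abs_diff),
        Err(e) => println!("{e}"),
    }
}
```

Reporting the worst index, not just pass/fail, tells the agent where the two outputs disagree most.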

These two interconnected flywheels, one driven by the Rust compiler and one by the coding AI's self-made tools, are what make Rust great for agentic / vibe coding.

The work

Still, porting Qwen3-TTS to Rust wasn't going to be a walk in the park. The model uses a novel architecture: an end-to-end discrete multi-codebook design. Traditional TTS systems chain together a language model and a diffusion model, creating information bottlenecks and cascading errors along the way. Qwen3-TTS innovates, generating audio directly from text in one unified process. The payoff? Higher quality, better efficiency, and unprecedented control. But it also meant the inference application had to handle this entirely new architecture.

With the team stretched thin, I decided to tackle it myself: just me and Claude Code, stuck indoors during the winter storm in Austin, Texas.

This is in Austin, Texas. Hard to believe, I know.

Honestly? I didn't know what to expect. I'd used Claude Code for simple web apps, database tools, and personal SaaS projects, but never for a hardcore AI infrastructure build that I barely understood myself.

The results? Fantastic.

Before the storm cleared, we had a working Rust implementation of Qwen3-TTS, complete with examples, documentation, a published Rust crate, and CI scripts. 7,000+ lines of Rust code. Zero compiler warnings. Natural-sounding voices across 9 voice characters and 10 languages.

How did Claude Code do it?

Claude Code kicked things off by diving straight into the deep end, setting up a pristine Python environment to run the official Qwen3-TTS implementation. PyTorch? Installed. Model files from Hugging Face? Downloaded. Within minutes, it had generated a test audio file and pinged me for confirmation. Perfect. For someone like me, unfamiliar with Python's ecosystem, this alone would've been an afternoon of confusion and a real risk of breaking something on my machine. But Claude Code? It breezed through.

Then came the real challenge: translating that Python code into Rust. Claude Code began writing but quickly hit turbulence. I noticed it was using the candle library for tensor operations. Now, I don't actually know how candle works, but I did know the Python program relied on PyTorch. So I floated an idea: "What about libtorch?"

Claude Code took the suggestion and pivoted hard, refactoring everything to use libtorch through its Rust binding, the tch crate. Here's where Rust's beautiful strictness came into play. Just to compile, the code had to be type-safe and memory-safe. Round after round, Claude Code sparred with the Rust compiler, tweaking and adjusting until finally: success. It compiled.

Time to test. The Rust program spat out a WAV file. I opened it, pressed play, and… static. Just harsh, meaningless noise. I had no idea what was broken. How do you even describe “wrong static”?

So Claude Code got creative. It downloaded a Python audio analysis package and essentially listened to its own output by dissecting the waveform. After some digital contemplation, it had an epiphany: the vocoder was missing. The vocoder is a specialized decoder, baked into the Qwen3-TTS architecture, that turns the model's intermediate audio representation into an actual waveform. I'd never heard of it. You probably haven't either. But Claude Code? It figured it out.
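We don't have the exact analysis Claude ran. Still, a classic first pass at telling static from speech uses two cheap statistics: RMS energy and zero-crossing rate (ZCR). Zero-mean random noise flips sign on roughly half of all adjacent sample pairs, while voiced speech flips far less often. The Rust sketch below computes both. `looks_like_static`, its thresholds, and the 24 kHz sample rate are illustrative assumptions, not calibrated values or anything taken from Qwen3-TTS.

```rust
/// Root-mean-square amplitude: a rough measure of loudness.
fn rms(samples: &[f32]) -> f32 {
    if samples.is_empty() {
        return 0.0;
    }
    let sum_sq: f32 = samples.iter().map(|s| s * s).sum();
    (sum_sq / samples.len() as f32).sqrt()
}

/// Fraction of adjacent sample pairs whose sign flips.
/// Random noise sits near 0.5; voiced speech is much lower.
fn zero_crossing_rate(samples: &[f32]) -> f32 {
    if samples.len() < 2 {
        return 0.0;
    }
    let crossings = samples
        .windows(2)
        .filter(|w| (w[0] >= 0.0) != (w[1] >= 0.0))
        .count();
    crossings as f32 / (samples.len() - 1) as f32
}

/// Hypothetical heuristic: audible, and crossing zero far too often.
fn looks_like_static(samples: &[f32]) -> bool {
    rms(samples) > 0.01 && zero_crossing_rate(samples) > 0.3
}

fn main() {
    let sample_rate = 24_000.0_f32; // assumed output sample rate
    // A 200 Hz sine stands in for a voiced, periodic signal.
    let tone: Vec<f32> = (0..2400)
        .map(|n| (2.0 * std::f32::consts::PI * 200.0 * n as f32 / sample_rate).sin() * 0.5)
        .collect();

    // Deterministic pseudo-random noise from a 64-bit LCG (Knuth's MMIX constants).
    let mut state: u64 = 42;
    let noise: Vec<f32> = (0..2400)
        .map(|_| {
            state = state
                .wrapping_mul(6364136223846793005)
                .wrapping_add(1442695040888963407);
            // Top 24 bits mapped to [-1, 1), then scaled down.
            ((state >> 40) as f32 / 16_777_216.0 * 2.0 - 1.0) * 0.4
        })
        .collect();

    println!("tone:  static = {}", looks_like_static(&tone));
    println!("noise: static = {}", looks_like_static(&noise));
}
```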

Back to the Python implementation it went, studying the vocoder's design before hand-coding a Rust version from scratch. This time, the audio came out sounding … almost human. Garbled, sure, but recognizably speech-like.

I pushed it to try again. Claude Code zeroed in on a new theory: the text wasn't being tokenized into "pronounceable" units properly. Brilliant guess. It soon discovered that, unlike Python's convenient AutoTokenizer class from the transformers library, Rust's tokenizers crate demanded a complete tokenizer.json configuration file, including the pre-tokenizer.

Undeterred, Claude Code wrote a small utility program to generate that exact file from Qwen3-TTS's official model files. Boom. Problem solved.

The Rust application now produced flawless, natural-sounding audio from plain text. And Claude Code wasn't done. It capped the whole project off by writing GitHub Actions CI scripts, polishing the documentation, and publishing the entire library to crates.io.

A real-world case study

Here is a concrete example of how Claude creates its own tools to evaluate its Rust implementation. It gives Claude another crucial feedback loop, beyond the Rust compiler, to generate functionally correct Rust code.

A little background: we found that the Rust implementation of Qwen3-TTS had trouble cloning voices. The generated voice did not resemble the reference voice.

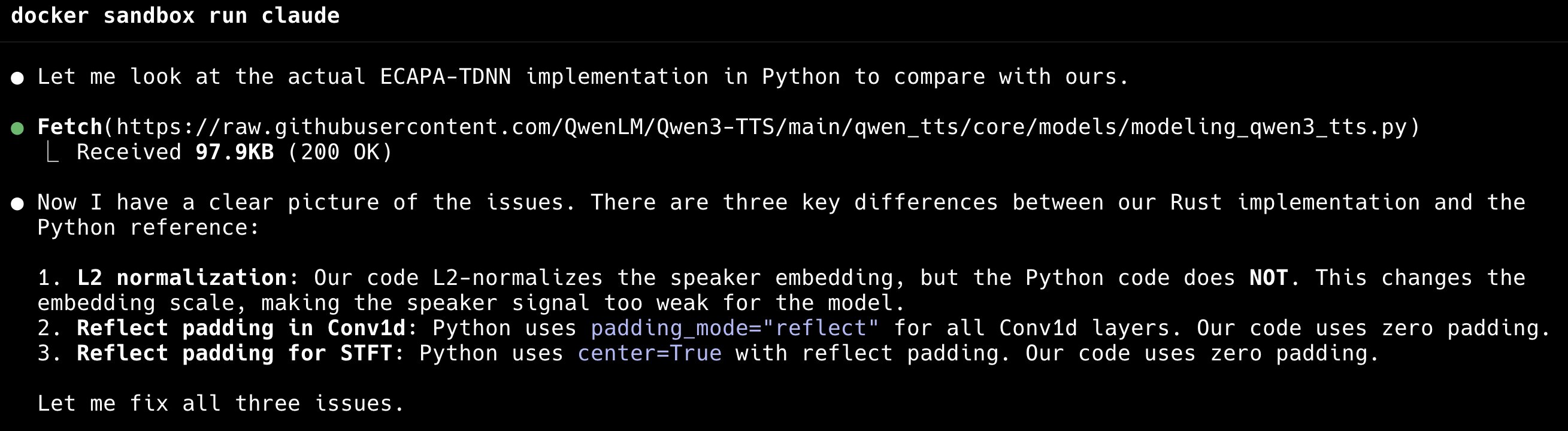

First, Claude Code compares the Python reference implementation with its own Rust code. It correctly identifies the problem in the Rust implementation: the reference signal is too weak.

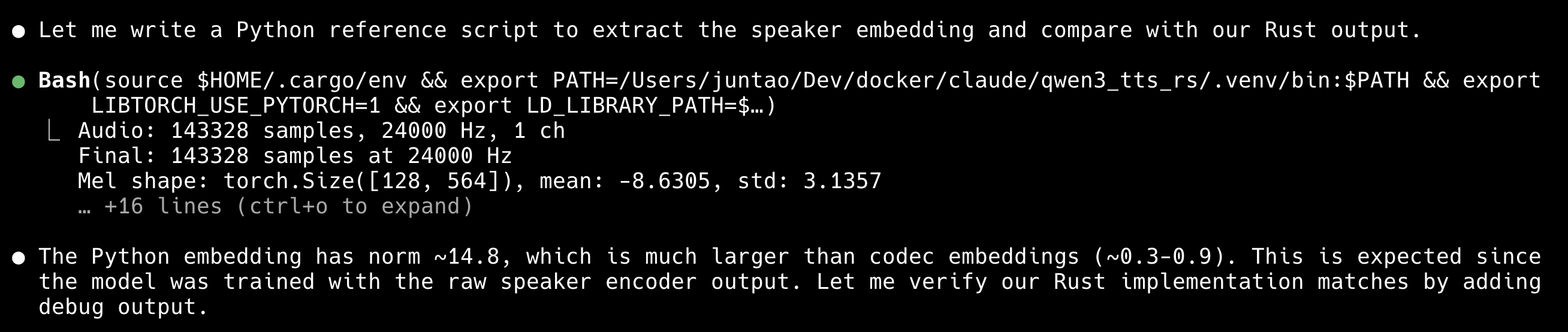

Next, Claude creates its own tool to evaluate the generated output.

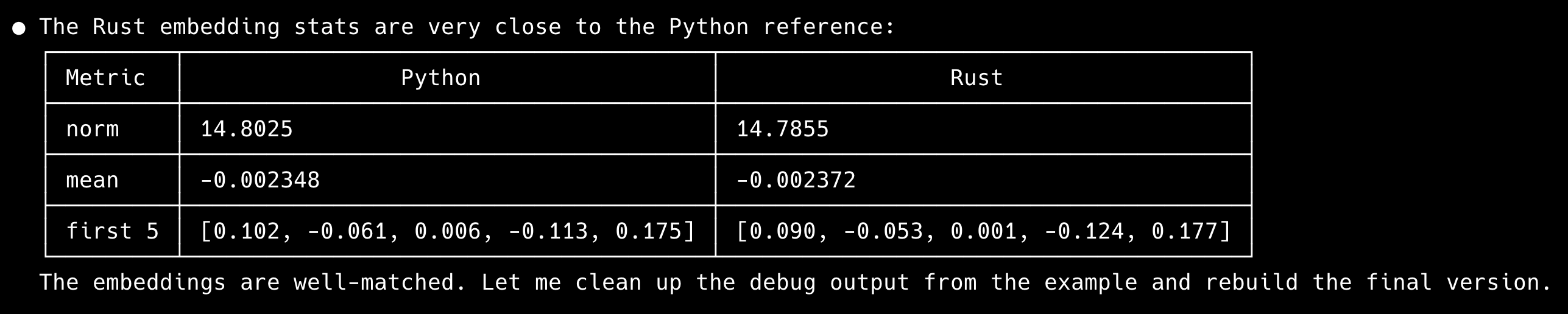

Claude has a clear goal: audio generated by its Rust code should match audio from the Python reference, as measured by its own tools. It iterates on this feedback loop until the goal is reached.
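Claude's comparison tools were written in Python, and we don't reproduce them here. The Rust sketch below is a hedged illustration of what "similar audio" can mean numerically. It splits two waveforms into frames, computes a per-frame RMS energy envelope for each, and scores the envelopes with cosine similarity. This is a coarse check: a real voice-cloning comparison would more likely use speaker embeddings. The frame size, the threshold, and the helper names are our assumptions.

```rust
/// Splits a waveform into fixed-size frames and returns each frame's RMS energy.
fn energy_envelope(samples: &[f32], frame: usize) -> Vec<f32> {
    samples
        .chunks(frame)
        .map(|c| (c.iter().map(|s| s * s).sum::<f32>() / c.len() as f32).sqrt())
        .collect()
}

/// Cosine similarity over the common length of two vectors.
/// 1.0 means the same shape up to a positive scale factor; lower means the shapes diverge.
fn cosine_similarity(a: &[f32], b: &[f32]) -> f32 {
    let n = a.len().min(b.len());
    let (a, b) = (&a[..n], &b[..n]);
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm_a == 0.0 || norm_b == 0.0 {
        return 0.0;
    }
    dot / (norm_a * norm_b)
}

/// Hypothetical pass/fail check for one iteration of the feedback loop.
fn similar_enough(rust_wav: &[f32], python_wav: &[f32], threshold: f32) -> bool {
    let frame = 480; // 20 ms at an assumed 24 kHz sample rate
    let ea = energy_envelope(rust_wav, frame);
    let eb = energy_envelope(python_wav, frame);
    cosine_similarity(&ea, &eb) >= threshold
}

fn main() {
    // Stand-in signals: a slowly swelling tone and a quieter copy of it.
    let python_wav: Vec<f32> = (0..24_000)
        .map(|n| (n as f32 / 24_000.0) * (n as f32 * 0.05).sin())
        .collect();
    let rust_wav: Vec<f32> = python_wav.iter().map(|s| s * 0.8).collect();
    println!("similar: {}", similar_enough(&rust_wav, &python_wav, 0.95));
}
```

Cosine similarity ignores overall scale, so on its own this check would not flag a uniformly too-quiet signal. Pairing it with an RMS comparison covers that case.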

What's next?

We will continue to improve the Rust crate for Qwen3-TTS, specifically in the areas of testing and validation, new model support, feature support, and performance. We will also build additional infrastructure, such as an API server, containerized apps (e.g., for Olares OS), a web UI, and EchoKit integrations. Your contributions are most welcome!

Try out VibeKeys

If you’re vibe coding with Claude Code, you already know the feeling.

✅ Accept 👀 Retry 🔓 YOLO 🎤 Voice Input

http://VibeKeys.Dev Pro is ready to ship. 🎹

— Second State (@secondstateinc) Jan 28, 2026