Open-source large language models are evolving at an unprecedented rate, with new releases occurring daily. This rapid pace of innovation poses a challenge: How can developers and engineers quickly adopt the latest LLMs? LlamaEdge offers a compelling answer. Powered by the llama.cpp runtime, it supports any models in the GGUF format.

In this article, we'll provide a step-by-step guide to running a newly open sourced model with LlamaEdge. With just the GGUF file and corresponding prompt template, you'll be able to quickly run emergent models on your own.

Understand the command line to run the model

Before running new models, let’s break down the key parts of an example LlamaEdge command. We will take the classic Llama-2-7b model as an example.

wasmedge --dir .:. --nn-preload default:GGML:AUTO:llama-2-7b-chat.Q5_K_M.gguf llama-chat.wasm --prompt-template llama-2-chat

wasmedge- Starts the WasmEdge runtime secure sandbox--dir- Specifies the model file directory--nn-preload default:GGML:AUTO:- Loads the WasmEdge ML plugin (ggml backend) and enables automatic hardware accelerationllama-2-7b-chat.Q5_K_M.gguf- The LLM model file name (case sensitive name)llama-chat.wasm- The wasm app that provides a CLI for you to “chat” with LLM models that runs on your PC. You can also usellama-api-server.wasmto create an API server for the model.--prompt-template llama-2-chat- Specifies the type of the prompt template suitable forllama-2-7b-chatmodel.

Understanding these core components will help construct new commands. The model file and prompt template vary across new LLMs, while the surrounding runtime configuration remains consistent.

Run a new model with LlamaEdge

Once you understand LlamaEdge command components, running new LLMs is straightforward with just the model file and prompt template. Let's walk through an example using Nous-Hermes-2-Mixtral-8x7B.

Step 1: Obtain the Model File

First, check if there is a GGUF format file available for the model. Helpful sources:

- Original model publishers

- Third party uploader accounts like TheBloke or Second State on Huggingface

- Convert from PyTorch yourself

Luckily, the Nous Hermes team released GGUF files on Huggingface. GGUF models typically end in “.gguf”.

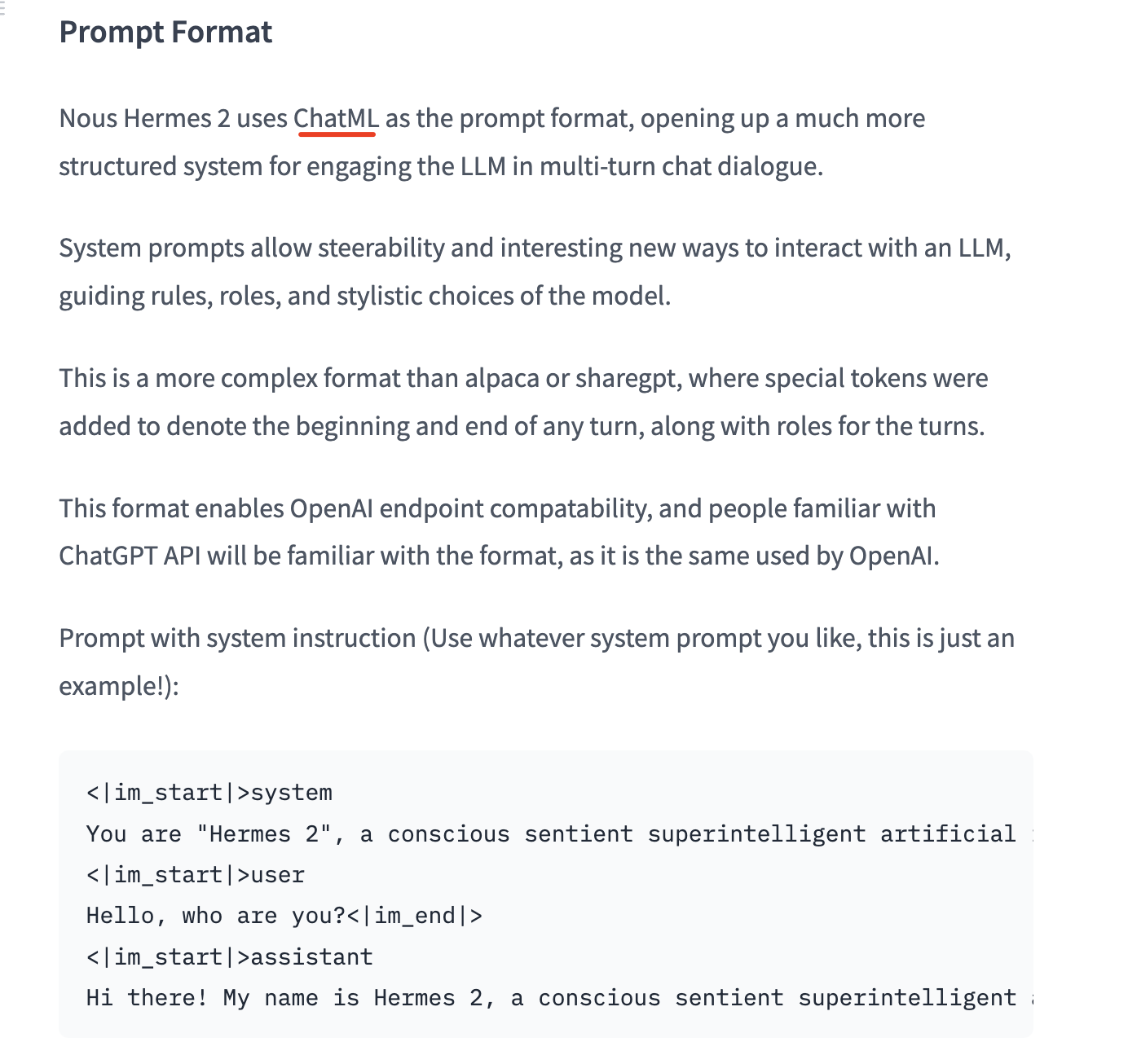

Step 2: Identify the Prompt Template Next, let’s find the prompt template of this model. Normally, the model team will tell users the prompt template and prompt type in the model card page. The following image is from Nous-Hermes-2-Mixtral-8x7B-DPO-GGUF Huggingface page. The model card on Huggingface specifies Nous-Hermes-2-Mixtral-8x7B uses the ChatML template.

Next, we need to check if LlamaEdge supports the ChatML prompt template needed for this model. LlamaEdge supports the following prompt templates. You can also reference the LlamaEdge documentation for a table mapping each template name to the expected prompt format.

- baichuan

- belle

- chatml

- deepseek

- intel

- llama

- mistral

- openchat

- solar

- vicuna

- wizard

- zphyr

Fortunately, ChatML is in the list of supported templates. This means we have everything needed to run the Nous-Hermes-2 model.

Step 3: Construct the Command

Now we can construct the full command to run the model, with the new model file and chatml template:

wasmedge --dir .:. --nn-preload default:GGML:AUTO:Nous-Hermes-2-Mixtral-8x7B-DPO-Q5_K_M.gguf llama-chat.wasm -p chatml

And that's it! We can run Nous-Hermes-2-Mixtral with LlamaEdge as soon the model file is published.

Further questions

Q: Can I use this process for my own fine-tuned models?

Yes, this article can help you run any new LLM available in the GGUF format through LlamaEdge, including your own fine-tuned creations.

Q: What if you could not find an existing prompt template in LlamaEdge?

You can in fact make changes to the Rust code in llama-chat project directly and assemble the model’s prompt yourself! The loop to append each new answer and question to the saved_prompt in main() is how you assemble a prompt according to a template by hand.

Q: What about the models that have a reverse prompt?

You can easily append reverse prompts using the -r flag. For example, Yi-34B-Chat has a reverse template <|im_end|>.

wasmedge --dir .:. --nn-preload default:GGML:AUTO:Yi-34B-Chat-ggml-model-q4_0.gguf llama-chat.wasm -p chatml -r '<|im_end|>'

Q: The model team doesn’t reveal the name of their prompt template. They just tell the prompt format in the model card page. What should I do?

If model documentation only provides a prompt type or format, consult LlamaEdge's prompt template documentation to try finding a match. If you cannot determine the appropriate template, please open an issue for assistance on LlamaEdge/LlamaEdge.

That's it. Hope this article can help you run more new models with LlamaEdge.

Discord

Discord