By apepkuss

In the article OpenVINO Inferencing using WasmEdge WASI-NN, we showed how to use OpenVINO to build a machine learning inference task for road segmentation. In the process, we noticed two interesting parts that could be further improved:

- The example uses the wasi-nn crate, which provides a Rust interface for the WASI-NN proposal and greatly reduces the complexity of building WebAssembly-based machine learning tasks in Rust. However, the interface provided by the wasi-nn crate is unsafe, so it is better suited as a low-level API for building higher-level libraries. We can therefore create a library that provides a safe interface on top of the wasi-nn crate.
- The input image is preprocessed with the opencv crate. However, because the opencv crate cannot be compiled into a wasm module, the image preprocessing had to be split out and implemented as a separate project.

We made preliminary improvements on both points:

- Drawing on earlier attempts by developers in the Rust and WebAssembly communities, we abstracted and safely encapsulated the unsafe interface defined in the wasi-nn crate and built a prototype of the [wasmedge-nn crate](https://crates.io/crates/wasmedge-nn). Below, we demonstrate how to replace the wasi-nn crate with the wasmedge-nn crate to rebuild the wasm inference module used in the previous article.
- The image crate, one of the well-known image processing libraries in the Rust community, provides the basic image preprocessing capabilities we need. In addition, because it is a native Rust implementation, an image processing library built on it can be compiled into a wasm module.
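
The idea of wrapping an unsafe low-level interface in a safe one can be sketched in plain Rust. This is only an illustration under stated assumptions: `raw_checksum` is a hypothetical stand-in for a raw binding and is not part of the wasi-nn crate, and `checksum` is a hypothetical wrapper, not the wasmedge-nn API.

```rust
/// Hypothetical low-level function standing in for a raw binding.
/// It is `unsafe` because the caller must guarantee that `ptr` points to
/// at least `len` readable bytes.
unsafe fn raw_checksum(ptr: *const u8, len: usize) -> u32 {
    let mut sum = 0u32;
    for i in 0..len {
        // SAFETY: the caller promises that `ptr..ptr + len` is readable.
        let byte = unsafe { *ptr.add(i) };
        sum = sum.wrapping_add(u32::from(byte));
    }
    sum
}

/// Hypothetical safe wrapper: it accepts a slice, so the pointer and the
/// length are always consistent, and it reports invalid input as an error.
pub fn checksum(data: &[u8]) -> Result<u32, String> {
    if data.is_empty() {
        return Err("input buffer is empty".to_string());
    }
    // SAFETY: `data` is a live slice, so its pointer and length are valid.
    Ok(unsafe { raw_checksum(data.as_ptr(), data.len()) })
}
```

Callers of `checksum` never write `unsafe` themselves; the invariants are checked once, inside the wrapper. A safe layer over a low-level crate follows the same pattern on a larger scale.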

Below, we use the same road segmentation example to demonstrate these improvements in detail.

Building wasm inference module based on wasmedge-nn

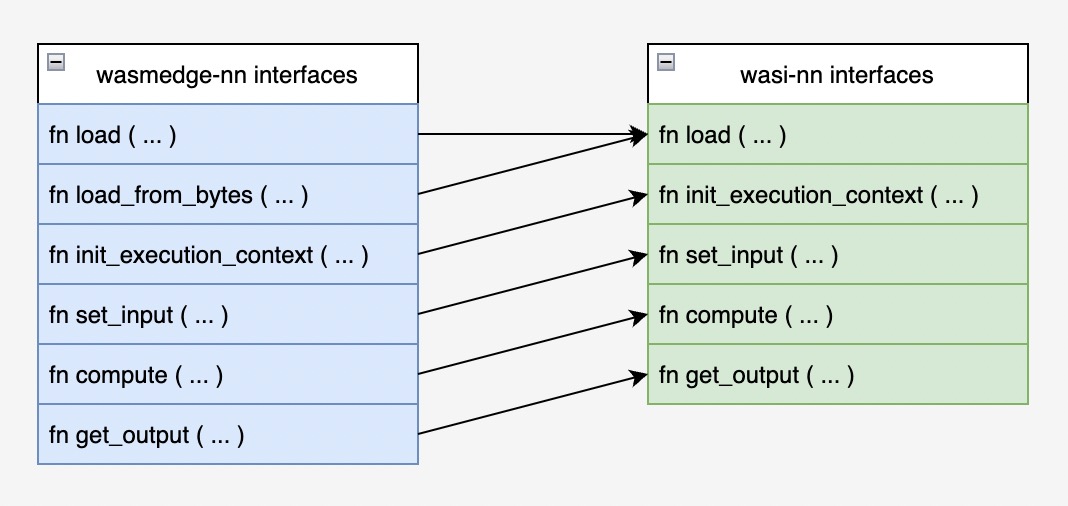

In the previous article, we used the five main interfaces defined in the wasi-nn crate, which correspond to the interfaces in the WASI-NN proposal. Let's take a look at the improved interface. In the figure above, the blue boxes are the interfaces defined in the nn module of the wasmedge-nn crate, which we want to use; the green boxes are the corresponding interfaces defined in the wasi-nn crate; and the arrows show the mapping between them. If you are interested in the design details of the wasmedge-nn crate, you can read the source code first. We will discuss them in a later article, so we do not elaborate here.

Next, we show in code how to use the interfaces and data structures provided by wasmedge-nn to re-implement the wasm inference module described in the previous article.

The following sample code is the wasm inference module rebuilt with the safe interface provided by the wasmedge-nn crate. As the comments in the code show, the interfaces are called in the same order as with the wasi-nn interface. The most obvious difference is that, because wasmedge-nn defines a safe interface, the keyword unsafe no longer appears in the example code. As explained in the previous article, the calling order shown in the sample code can be treated as a template: if the model is replaced to perform a new inference task, the code below requires almost no changes. You can try other models if interested. The full code for the example below can be found here.

    use std::env;
    use wasmedge_nn::{
        cv::image_to_bytes,
        nn::{ctx::WasiNnCtx, Dtype, ExecutionTarget, GraphEncoding, Tensor},
    };

    fn main() -> Result<(), Box<dyn std::error::Error>> {
        // Expected arguments: <model.xml> <model.bin> <image>
        let args: Vec<String> = env::args().collect();
        let model_xml_name: &str = &args[1];
        let model_bin_name: &str = &args[2];
        let image_name: &str = &args[3];

        // Load image file and convert it into a sequence of bytes
        println!("Load image file and convert it into tensor ...");
        let bytes = image_to_bytes(image_name.to_string(), 512, 896, Dtype::F32)?;

        // Create a Tensor instance, including data, dimensions, types, etc.
        let tensor = Tensor {
            dimensions: &[1, 3, 512, 896],
            r#type: Dtype::F32.into(),
            data: bytes.as_slice(),
        };

        // Create a WASI-NN Context instance
        let mut ctx = WasiNnCtx::new()?;

        // Load model files and other configuration information required for inference
        println!("Load model files ...");
        let graph_id = ctx.load(
            model_xml_name,
            model_bin_name,
            GraphEncoding::Openvino,
            ExecutionTarget::CPU,
        )?;

        // Initialize execution environment context
        println!("initialize the execution context ...");
        let exec_context_id = ctx.init_execution_context(graph_id)?;

        // Provide input to the execution environment
        println!("Set input tensor ...");
        ctx.set_input(exec_context_id, 0, tensor)?;

        // Execute inference compute
        println!("Do inference ...");
        ctx.compute(exec_context_id)?;

        // Extract inference compute result:
        // 1 x 4 x 512 x 896 elements, 4 bytes per f32 element
        println!("Extract result ...");
        let mut out_buffer = vec![0u8; 1 * 4 * 512 * 896 * 4];
        ctx.get_output(exec_context_id, 0, out_buffer.as_mut_slice())?;

        // Export the result to the specified binary file.
        // `dump` is a helper that writes the buffer to a file; its definition
        // is not shown in this excerpt.
        println!("Dump result ...");
        dump(
            "wasinn-openvino-inference-output-1x4x512x896xf32.tensor",
            out_buffer.as_slice(),
        )?;

        Ok(())
    }
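
The size of the output buffer and the export step can be sketched with the standard library alone. This is a minimal sketch, not code from the example project: `tensor_byte_len` and `dump_bytes` are hypothetical helper names, and `dump_bytes` is only an assumption about what a dump-like helper might do.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Number of bytes needed for a tensor with the given dimensions
/// and element size (hypothetical helper).
pub fn tensor_byte_len(dimensions: &[usize], bytes_per_element: usize) -> usize {
    dimensions.iter().product::<usize>() * bytes_per_element
}

/// Write a raw byte buffer to a file (hypothetical helper).
pub fn dump_bytes(path: impl AsRef<Path>, buffer: &[u8]) -> io::Result<()> {
    fs::write(path, buffer)
}
```

For the road segmentation output, `tensor_byte_len(&[1, 4, 512, 896], 4)` gives 7,340,032 bytes, which matches the `1 * 4 * 512 * 896 * 4` expression in the example.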

Note that the exported .tensor binary file is used for subsequent visualization of the inference results. Because the sample code runs from the command line, in some environments (such as Docker) the results cannot be displayed directly through API calls, so here we only export them. For other kinds of inference tasks, such as classification, where no visual display is required, you can print the results directly instead of exporting them to a file. For reference, the following Python code (quoted from WasmEdge-WASINN-examples/openvino-road-segmentation-adas) visualizes the inference results by reading the exported .tensor file.

    import matplotlib.pyplot as plt
    import numpy as np

    # Read the binary file holding the inference results and convert it to the original dimension
    data = np.fromfile("wasinn-openvino-inference-output-1x4x512x896xf32.tensor", dtype=np.float32)
    print(f"data size: {data.size}")
    resized_data = np.resize(data, (1,4,512,896))
    print(f"resized_data: {resized_data.shape}, dtype: {resized_data.dtype}")

    # Prepare data for visualization: pick the most likely class for every pixel
    segmentation_mask = np.argmax(resized_data, axis=1)
    print(f"segmentation_mask shape: {segmentation_mask.shape}, dtype: {segmentation_mask.dtype}")

    # Draw and show
    plt.imshow(segmentation_mask[0])
    plt.show()
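
The same post-processing can be sketched in Rust, the language of the inference module. The block below is an illustration, not code from wasmedge-nn: `bytes_to_f32` and `argmax_channels` are hypothetical helper names, and the data is assumed to be a single-batch tensor in channel-major (C, H, W) order, matching the (1, 4, 512, 896) shape used in the Python script.

```rust
/// Decode a buffer of native-endian f32 bytes into f32 values.
/// Trailing bytes that do not form a full 4-byte group are ignored.
pub fn bytes_to_f32(bytes: &[u8]) -> Vec<f32> {
    bytes
        .chunks_exact(4)
        .map(|c| f32::from_ne_bytes([c[0], c[1], c[2], c[3]]))
        .collect()
}

/// For every pixel, return the index of the channel with the largest value.
/// `data` is assumed to hold `channels` planes of `height * width` values each.
pub fn argmax_channels(data: &[f32], channels: usize, height: usize, width: usize) -> Vec<u8> {
    let plane = height * width;
    assert_eq!(data.len(), channels * plane, "unexpected tensor size");
    (0..plane)
        .map(|p| {
            let mut best = 0usize;
            for c in 1..channels {
                // Strict comparison keeps the first maximum on ties.
                if data[c * plane + p] > data[best * plane + p] {
                    best = c;
                }
            }
            best as u8
        })
        .collect()
}
```

The resulting mask holds one class index per pixel, which is the same information the Python script passes to `plt.imshow`.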

Image preprocessing functions based on image crate

In addition to providing a safe interface for performing inference tasks, the wasmedge-nn crate provides a basic image preprocessing function, image_to_bytes, through its cv module. The implementation draws on the design of the image2tensor open source project. It converts the input image into a sequence of bytes that meets the requirements of the inference task; in subsequent steps, those bytes are used to build the Tensor that is passed to the inference interface. Since the current backend only supports OpenVINO, the image processing requirements are relatively simple, so the cv module contains only this one function.

    use image::{self, io::Reader, DynamicImage};
    use std::path::Path;
    // `Dtype` and `CvResult` are crate-internal types; their imports are not shown in this excerpt.

    // Resize the image file to a specific size and convert it to a sequence of bytes of the specified type
    pub fn image_to_bytes(
        path: impl AsRef<Path>,
        nheight: u32,
        nwidth: u32,
        dtype: Dtype,
    ) -> CvResult<Vec<u8>> {
        // Read and decode the image
        let pixels = Reader::open(path.as_ref())?.decode()?;

        // Resize to exactly nwidth x nheight
        let dyn_img: DynamicImage = pixels.resize_exact(nwidth, nheight, image::imageops::Triangle);

        // Convert to BGR format
        let bgr_img = dyn_img.to_bgr8();

        // Convert to a sequence of bytes of the specified type
        let raw_u8_arr: &[u8] = &bgr_img.as_raw()[..];
        let u8_arr = match dtype {
            Dtype::F32 => {
                // Create an array to hold the f32 value of those pixels
                let bytes_required = raw_u8_arr.len() * 4;
                let mut u8_arr: Vec<u8> = vec![0; bytes_required];

                for i in 0..raw_u8_arr.len() {
                    // Read the number as a f32 and break it into u8 bytes
                    let u8_f32: f32 = raw_u8_arr[i] as f32;
                    let u8_bytes = u8_f32.to_ne_bytes();

                    for j in 0..4 {
                        u8_arr[(i * 4) + j] = u8_bytes[j];
                    }
                }

                u8_arr
            }
            // U8 data is passed through unchanged
            Dtype::U8 => raw_u8_arr.to_vec(),
        };

        Ok(u8_arr)
    }
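
The two data transformations inside image_to_bytes, channel reordering and widening each u8 value into f32 bytes, can be illustrated without the image crate. This is a sketch under stated assumptions: `rgb_to_bgr` and `u8_to_f32_bytes` are hypothetical names that do not exist in wasmedge-nn, and the input is assumed to be tightly packed 3-byte RGB pixels.

```rust
/// Swap the first and third byte of every 3-byte pixel (RGB -> BGR).
/// Bytes that do not form a complete pixel are left unchanged.
pub fn rgb_to_bgr(rgb: &[u8]) -> Vec<u8> {
    let mut bgr = rgb.to_vec();
    for pixel in bgr.chunks_exact_mut(3) {
        pixel.swap(0, 2);
    }
    bgr
}

/// Widen every u8 value to f32 and emit its native-endian bytes,
/// so the output is four times as long as the input.
pub fn u8_to_f32_bytes(raw: &[u8]) -> Vec<u8> {
    raw.iter()
        .flat_map(|&v| f32::from(v).to_ne_bytes())
        .collect()
}
```

The iterator form of `u8_to_f32_bytes` produces the same bytes as the index-based loop in the F32 branch; which one to prefer is largely a matter of style.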

With the safe wasmedge-nn crate and an image processing library that, unlike the opencv crate, can be compiled to Wasm, AI inference with Rust and WebAssembly becomes much easier. Then just follow the instructions to run the OpenVINO model and it is done.

Summary

The wasi-nn crate provides Rust developers with a basic low-level interface, which greatly reduces the complexity of interface calls when using the built-in WASI-NN support of the WasmEdge Runtime. On this basis, the wasmedge-nn crate provides encapsulated safe interfaces and better defines the user-facing interface for inference tasks. At the same time, through further abstraction, the front-end interface for inference tasks is decoupled from the back-end interface for inference engines, achieving loose coupling between the two.
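
One common way to express this kind of front-end and back-end decoupling in Rust is a trait. The sketch below is purely illustrative and does not reflect the actual design of wasmedge-nn: `Backend`, `Context`, and `InvertBackend` are hypothetical names, and the toy engine merely inverts bytes.

```rust
/// Hypothetical back-end interface: what an inference engine must provide.
pub trait Backend {
    /// Run inference on raw input bytes and return raw output bytes.
    fn infer(&mut self, input: &[u8]) -> Result<Vec<u8>, String>;
}

/// Hypothetical front-end type: user code only talks to `Context`,
/// never to a concrete engine.
pub struct Context<B: Backend> {
    backend: B,
    input: Option<Vec<u8>>,
}

impl<B: Backend> Context<B> {
    pub fn new(backend: B) -> Self {
        Context { backend, input: None }
    }

    /// Store a copy of the input tensor data.
    pub fn set_input(&mut self, data: &[u8]) {
        self.input = Some(data.to_vec());
    }

    /// Forward the stored input to the engine; fails if no input was set.
    pub fn compute(&mut self) -> Result<Vec<u8>, String> {
        let input = self
            .input
            .as_deref()
            .ok_or("no input tensor has been set")?;
        self.backend.infer(input)
    }
}

/// Toy engine used only for this sketch: it inverts every byte.
pub struct InvertBackend;

impl Backend for InvertBackend {
    fn infer(&mut self, input: &[u8]) -> Result<Vec<u8>, String> {
        Ok(input.iter().map(|&b| !b).collect())
    }
}
```

With this shape, supporting another engine means adding another `Backend` implementation, while code written against `Context` stays unchanged.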

In addition, the image preprocessing function in the cv module, built on the image crate, allows image preprocessing and inference to be compiled into the same Wasm module. This gives a single pipeline that goes from the original image to the input tensor, then to the inference computation, and finally to exporting the result.

Details about the wasmedge-nn crate will be covered in the next article. In the meantime, visit the wasmedge-nn GitHub repo to learn more.

Feedback and suggestions from developers and researchers interested in WasmEdge + AI are highly appreciated. You are also welcome to share your practical experience and stories with our WasmEdge-WASINN-examples open source project. Thanks!